2019-2020

Self-driving car and AR diagnostic software

The aim of this research project is to build a self-driving vehicle, to create our own stability control system that enables the vehicle to travel reliably and safely under extreme conditions, and to develop augmented reality-based diagnostic software for the vehicle.

Sponsors:

Participants:

- Hardware design and 3D printing

- Control system and circuit design

- Central control unit programming

- AR app development

- Communication between vehicle and phone

All rights reserved! Please note: this is an ongoing project. Some aspects may not be published, or only a censored version may be available, and results and new developments may appear here only later. Use of the research results requires written permission from the author(s) responsible for the respective partial results.

Summary:

The goal of the project is to create a driverless vehicle that can navigate independently and find the safest route to its destination. A safe journey requires more than avoiding obstacles and collisions. It also requires a stability control system that detects anomalies (e.g., wheel slip) in real time and intervenes in the control system. This prevents slipping, the wheels lifting off the ground, or a possible rollover or spin.
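The following minimal Python sketch shows one common way to flag wheel slip. It compares a wheel's surface speed with a reference vehicle speed through the slip ratio. How the two speeds are obtained, the `eps` guard and the 0.2 threshold are all assumptions for illustration, not values from this project.

```python
from dataclasses import dataclass


@dataclass
class WheelSample:
    wheel_speed: float    # wheel surface speed in m/s (assumed: angular speed * wheel radius)
    vehicle_speed: float  # reference vehicle speed in m/s (assumed source, e.g. an undriven wheel)


def slip_ratio(sample: WheelSample, eps: float = 0.05) -> float:
    """Slip ratio (v_wheel - v_vehicle) / max(|v_vehicle|, eps).

    Positive: the wheel spins faster than the vehicle moves (traction loss).
    Negative: the wheel turns slower than the vehicle moves (locking under braking).
    eps avoids division by zero near standstill.
    """
    reference = max(abs(sample.vehicle_speed), eps)
    return (sample.wheel_speed - sample.vehicle_speed) / reference


def detect_slip(sample: WheelSample, threshold: float = 0.2) -> bool:
    """Flag an anomaly when the magnitude of the slip ratio exceeds a hypothetical threshold."""
    return abs(slip_ratio(sample)) > threshold
```

For example, a wheel turning at 2.5 m/s on a vehicle moving at 2.0 m/s gives a slip ratio of 0.25, which this sketch would flag. The stability control system could then react, for instance by reducing motor power.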

Hardware:

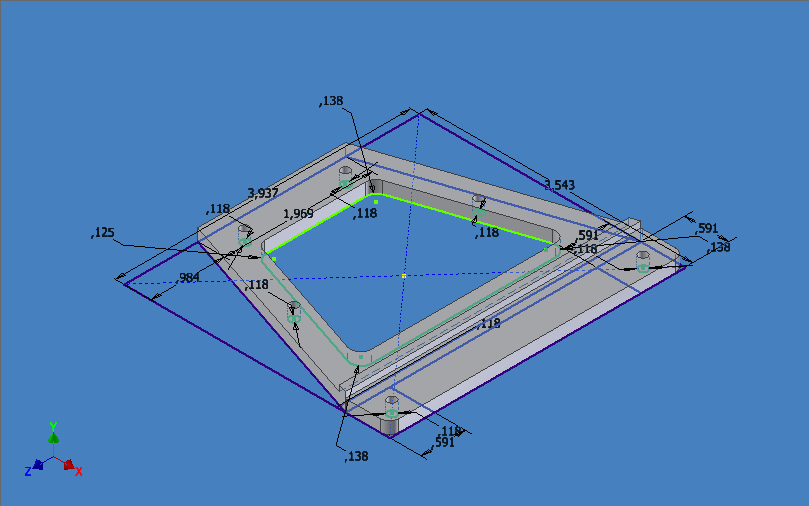

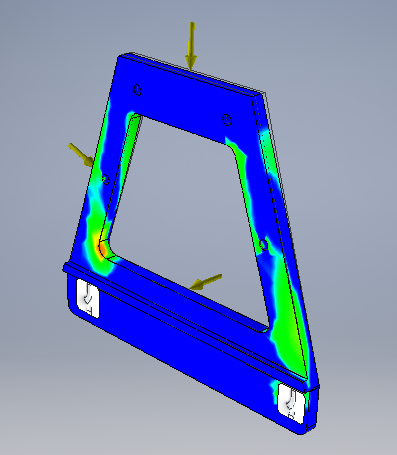

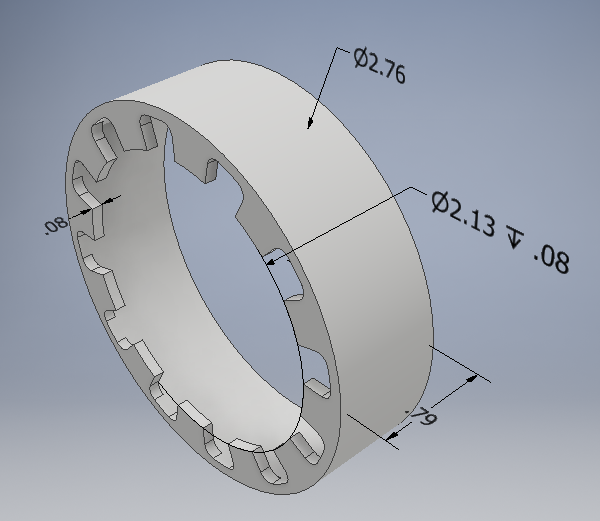

The mounts for the sensors and the control electronics were 3D printed. During design, they were tested to verify that they meet the load and stiffness requirements.

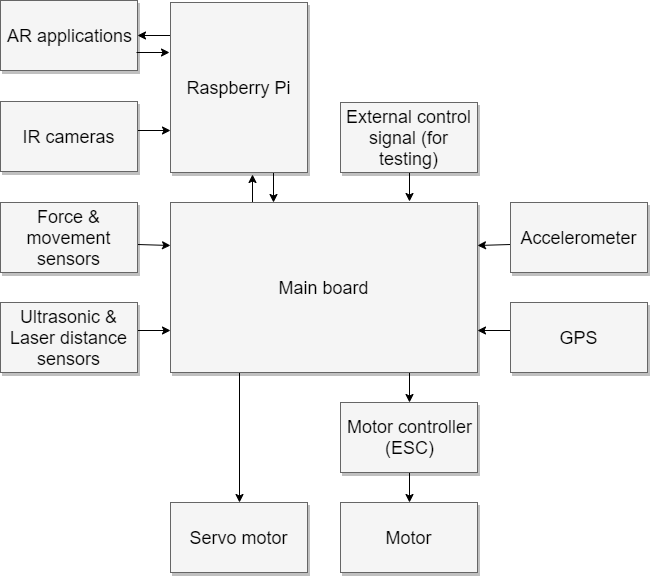

This block diagram shows the complexity of the system and the role of the central panel. The central panel must be redundant in the final version, since the aim is to develop a system that can function in real-world situations and on real roads.

Software:

The Raspberry Pi currently runs a Python script that handles image processing and data transfer, while a separate control unit receives and preprocesses the sensor data. There are several reasons for this split.

The Raspberry Pi is a small computer running a Linux-based operating system. Despite regular security updates, a full-fledged computer may have software vulnerabilities that could be exploited remotely, potentially allowing an attacker to take control of the car. To rule this out under all circumstances, the most critical tasks run on a system whose software performs only its specific task. This avoids inadvertently created or unmanaged vulnerabilities.

Load balancing was also an important consideration when deciding which control unit performs which task.

Other control units in the system run a control program written in C/C++.
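A dedicated control unit that performs only its specific task could, for example, accept exactly one fixed-size command frame and reject anything malformed or out of range. The frame layout, `CMD_DRIVE`, the limits and the checksum scheme below are hypothetical. The sketch is written in Python for readability, even though the control units themselves run C/C++.

```python
import struct
from typing import Optional, Tuple

# Hypothetical fixed frame layout, little-endian, 6 bytes:
#   uint8  message id (only CMD_DRIVE is accepted)
#   int16  steering angle in 0.1 degree units
#   int16  target speed in mm/s
#   uint8  checksum = sum of the preceding bytes modulo 256
FRAME = struct.Struct("<BhhB")
CMD_DRIVE = 0x01
MAX_STEER_DECIDEG = 300  # hypothetical limit: +/- 30.0 degrees
MAX_SPEED_MM_S = 3000    # hypothetical limit: +/- 3 m/s


def encode_drive(steer_decideg: int, speed_mm_s: int) -> bytes:
    """Build a drive command frame (the sender side, e.g. the Raspberry Pi)."""
    body = struct.pack("<Bhh", CMD_DRIVE, steer_decideg, speed_mm_s)
    return body + bytes([sum(body) % 256])


def decode_drive(frame: bytes) -> Optional[Tuple[int, int]]:
    """Return (steer, speed) for a well-formed, in-range frame; otherwise None."""
    if len(frame) != FRAME.size:
        return None  # wrong length: reject without interpreting anything
    msg_id, steer, speed, checksum = FRAME.unpack(frame)
    if msg_id != CMD_DRIVE:
        return None  # unknown command: this unit does only one thing
    if checksum != sum(frame[:-1]) % 256:
        return None  # corrupted frame
    if abs(steer) > MAX_STEER_DECIDEG or abs(speed) > MAX_SPEED_MM_S:
        return None  # physically implausible request
    return steer, speed
```

With this design, even a compromised Raspberry Pi could only send commands within the limits enforced by the control unit. It could not reconfigure the control unit or run arbitrary code on it.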

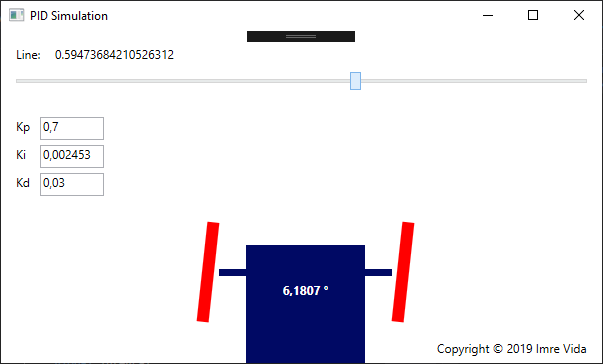

In the first development phase, the goal is to write a line tracking algorithm in which the cameras detect the line and, based on its position, a PID controller calculates the optimal steering angle. A simulation program written in C# was developed for testing the steering algorithm, which made fine-tuning the PID gains (multipliers) much easier.
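Below is a minimal sketch of a discrete PID steering controller of the kind described above. It assumes the error is the line's normalized horizontal offset from the image center and the output is a steering angle in degrees. The gains, the output limits and the simple anti-windup rule are placeholders; in this project the gains were tuned with the C# simulator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float        # proportional gain (placeholder value chosen by the caller)
    ki: float        # integral gain
    kd: float        # derivative gain
    out_min: float   # steering limit, e.g. -30 degrees (hypothetical)
    out_max: float   # steering limit, e.g. +30 degrees (hypothetical)
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        """Return the clamped steering command for one control step of length dt seconds."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self.integral += error * dt
        # No derivative term on the very first step (no previous error yet)
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        unclamped = self.kp * error + self.ki * self.integral + self.kd * derivative
        clamped = max(self.out_min, min(self.out_max, unclamped))
        if clamped != unclamped:
            # Simple anti-windup: stop accumulating while the steering is saturated
            self.integral -= error * dt
        return clamped
```

For example, `PID(kp=10.0, ki=0.0, kd=0.0, out_min=-30.0, out_max=30.0).update(0.5, 0.02)` returns `5.0`.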

The augmented reality-based diagnostic software can help owners and mechanics better assess the condition of a vehicle. We plan for this utility to alert the user to errors and to critically worn parts, and to keep track of wear and tear.

We are still evaluating which data transfer protocol to use. At the moment, the sensor data is sent to the diagnostic phone over a UDP socket.
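Here is a minimal sketch of sending one sensor sample over UDP with Python's standard `socket` and `struct` modules. The packet layout (a sequence number followed by three float32 values) and the choice of fields are assumptions. A sequence number is useful with UDP because datagrams can be lost or arrive out of order, and the receiver can use it to notice gaps.

```python
import socket
import struct
from typing import Tuple

# Hypothetical packet layout, little-endian, 16 bytes in total:
#   uint32  sequence number (lets the receiver detect lost or reordered datagrams)
#   float32 distance traveled [m]
#   float32 speed [m/s]
#   float32 acceleration [m/s^2]
PACKET = struct.Struct("<Ifff")


def pack_sample(seq: int, distance_m: float, speed_m_s: float, accel_m_s2: float) -> bytes:
    # Wrap the sequence number so it always fits in a uint32
    return PACKET.pack(seq & 0xFFFFFFFF, distance_m, speed_m_s, accel_m_s2)


def unpack_sample(data: bytes) -> Tuple[int, float, float, float]:
    # Raises struct.error if the datagram is not exactly PACKET.size bytes long
    return PACKET.unpack(data)


def send_sample(sock: socket.socket, phone_addr: Tuple[str, int], seq: int,
                distance_m: float, speed_m_s: float, accel_m_s2: float) -> None:
    # One datagram per sample; UDP gives no delivery or ordering guarantee
    sock.sendto(pack_sample(seq, distance_m, speed_m_s, accel_m_s2), phone_addr)


def receive_sample(sock: socket.socket) -> Tuple[int, float, float, float]:
    # Phone side: read one datagram and decode it with the same layout
    data, _sender = sock.recvfrom(PACKET.size)
    return unpack_sample(data)
```

On the vehicle, `send_sample` would be called with a socket created by `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and the phone's IP address and port. Both values depend on the network setup and are not given here.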

The app can currently display the distance traveled by the vehicle using augmented reality, as shown in the video above. The movement is measured by a specially designed, wheel-mounted incremental optical sensor on the rear wheel. The augmented reality portion was created with the EasyAR SDK, but marker optimization and alternative tracking methods are still being tested. The next step is to display the vehicle's other parameters (speed, acceleration, direction, etc.) in a clear, easy-to-understand way.
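A minimal sketch of converting the incremental sensor's pulse count into distance and speed follows. The number of pulses per revolution and the wheel diameter are hypothetical parameters. The sketch assumes a single-channel sensor that counts pulses but cannot sense the direction of rotation.

```python
import math
from dataclasses import dataclass


@dataclass
class WheelOdometer:
    ticks_per_rev: int       # hypothetical: pulses per wheel revolution
    wheel_diameter_m: float  # hypothetical: rear wheel diameter in meters
    total_ticks: int = 0     # pulses counted since start

    @property
    def meters_per_tick(self) -> float:
        # One revolution moves the vehicle by the wheel circumference (assuming no slip)
        return math.pi * self.wheel_diameter_m / self.ticks_per_rev

    def add_ticks(self, ticks: int) -> None:
        self.total_ticks += ticks

    @property
    def distance_m(self) -> float:
        return self.total_ticks * self.meters_per_tick

    def speed_m_s(self, ticks_in_window: int, window_s: float) -> float:
        # Average speed over a fixed measurement window
        return ticks_in_window * self.meters_per_tick / window_s
```

Because the conversion assumes the wheel rolls without slipping, distance measured on the rear wheel becomes inaccurate exactly when the stability control system detects wheel slip.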

The other video shows the first test of the image processing-based line tracking, which is still expected to undergo major changes. Meanwhile, the vehicle is being prepared to transmit a first-person-view camera image, which will also be viewable in VR.
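One simple way to turn a camera frame into a steering error is to threshold a single grayscale row and take the centroid of the dark pixels. The sketch below assumes a dark line on a light surface and an illustrative threshold of 80. The project's actual image processing may work differently. The returned offset could serve as the error input of the PID controller mentioned above.

```python
from typing import Optional, Sequence


def line_offset(row: Sequence[int], threshold: int = 80) -> Optional[float]:
    """Normalized horizontal offset of a dark line in one grayscale image row.

    Returns a value in [-1, 1] (negative: line left of center, positive: right of center),
    or None when no pixel is darker than the threshold (line lost).
    """
    if len(row) < 2:
        raise ValueError("row must contain at least two pixels")
    # Columns whose brightness (0-255) is below the threshold are treated as line pixels
    dark = [x for x, value in enumerate(row) if value < threshold]
    if not dark:
        return None
    centroid = sum(dark) / len(dark)
    half_width = (len(row) - 1) / 2  # pixel index of the image center
    return (centroid - half_width) / half_width
```

In practice, a row near the bottom of the frame (closest to the vehicle) would typically be used. Several rows could be combined to make the estimate less sensitive to noise.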

- Dynamic cruise control: The control electronics keep the vehicle's speed constant using the built-in sensors, adapting to load and terrain conditions.

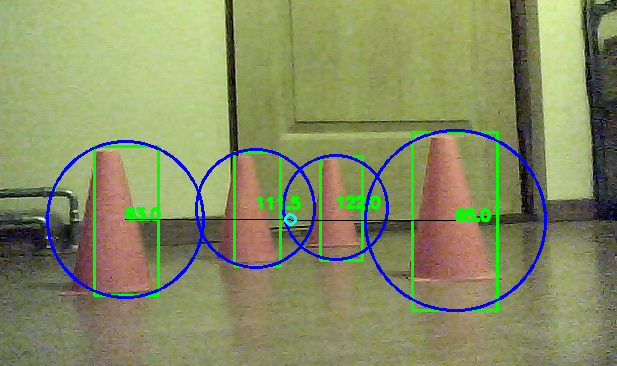

- Object recognition: The vehicle detects objects in front of it using the 640 × 480 camera mounted at the front. The system then makes decisions based on this data and a set of preprogrammed rules.

- Headlights and turn signal: The front and rear of the vehicle are equipped with headlights that allow the vehicle to function in low light conditions, as well as provide external observers with clear signals of the vehicle's decisions and changes of direction.
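The following sketch illustrates how rule-based decisions could be made from object detection results by mapping detections to a driving action. The detection format, the labels in `STOP_LABELS` and the area thresholds are hypothetical. Using bounding-box size as a proximity cue is a crude assumption, not a description of the vehicle's actual rule set.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class Action(Enum):
    CONTINUE = "continue"
    SLOW_DOWN = "slow_down"
    STOP = "stop"


@dataclass
class Detection:
    label: str            # hypothetical class name reported by the detector
    area_fraction: float  # bounding-box area / frame area (640 x 480), a crude proximity cue
    center_x: float       # normalized horizontal position in [-1, 1]


STOP_LABELS = {"person", "stop sign"}  # hypothetical rule: always stop for these in our path


def decide(detections: Iterable[Detection], path_half_width: float = 0.5) -> Action:
    """Return the most cautious action required by any detection in the vehicle's path."""
    action = Action.CONTINUE
    for det in detections:
        if abs(det.center_x) > path_half_width:
            continue  # object is outside the assumed driving corridor
        if det.label in STOP_LABELS or det.area_fraction > 0.25:
            return Action.STOP  # nothing is more cautious than stopping
        if det.area_fraction > 0.05:
            action = Action.SLOW_DOWN
    return action
```

Because the strongest rule wins, adding a new rule can only make the vehicle more cautious, never less. This keeps the rule set easy to reason about.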

More details available here: https://vidaimi.com/project/ugv

Tenders and competitions:

- More details soon.